Thursday, August 27, 2009

Is Netflix' head in the clouds?

More recently, Netflix added "Watch Instantly," a smaller selection of movies that can be watched as video streams in a Microsoft Silverlight-compatible browser. This viewing is unlimited and included in the DVD subscription price. With high-quality content, both movies and older TV programming, Netflix has started to make the traditional cable companies' offerings obsolete, especially pay-per-view.

As a result, I long ago canceled my expensive cable subscription, using the web to get my information and Netflix to deliver my movies.

Heaven.

Well, perhaps not for Netflix.

Consider for a moment what streaming movies means. At first glance, Netflix looks like it is doing the technologically smart thing. Netflix wants to migrate more of its infrastructure to "the cloud," which makes perfect sense, especially for a rapidly growing company: weightless bits on scalable, virtual servers, streamed across the internet to your screen without a human to direct the flow. Netflix presumably pays the content owner a license fee and has a server deliver the movie at minimal bandwidth cost. That sounds good until you realize that the "all you can eat" subscription model invites a lot more consumption. Unemployed at home? Just queue up those movies and watch all day. Each view costs Netflix something. Then consider your plan. Do you need to pay for the 3-DVD plan, or will the 1-DVD plan with the same unlimited streaming do? It is a recession, right? Cut that plan cost to a minimum.

The logic, it seems to me, is that members will likely start to reduce their plan costs while retaining unlimited streaming. DVD watchers like me are probably already unprofitable for Netflix once mailing and handling costs are counted. Unless streaming content is much more profitable, Netflix may find itself with rising license fees and bandwidth costs and declining revenue. Trying to raise subscription fees will be hard.

Now consider the internet carriers. So far, Netflix has surfed the net-neutral web with ease. But streaming content uses bandwidth, a lot of bandwidth. We've already seen cable carriers start to restrict bandwidth rates and consumption. So far this has been justified by all those "illegal file sharers using BitTorrent." Netflix is clearly legitimate, but increased use of streaming could start to saturate the available bandwidth, especially on cable networks. Then what? Either the carriers will start to demand extra money from Netflix to handle the traffic, or they will demand it from their customers. In the former case, Netflix' profits are squeezed. In the latter, customers will get annoyed and may start to cancel their Netflix subscriptions if they cannot get the service they expect.

And what of the content providers? The more Netflix' business grows, the more vulnerable it is to demands from the content providers for higher fees. Without content, Netflix has no business, so it is supremely vulnerable to the demands of content owners. That can only mean costs will rise as those providers demand higher viewing fees.

My sense is that Netflix' business model will not scale. Fixed subscription revenue combined with variable consumption costs will tend to drive increased consumption, squeezing profitability, possibly into the red. It seems only a matter of time before something gives. Initially, I would expect Netflix to suffer declining revenue per member as members switch to plans with fewer DVDs and rely more on streaming. Depending on the relative profitability of the DVD and streaming businesses, this may affect profitability positively or negatively. Eventually the carriers and content providers will demand bigger pieces of the revenue pie, and Netflix will be forced either to accept reduced margins or to raise rates and lose customers.
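To make the fixed-revenue, variable-cost squeeze concrete, here is a toy per-member margin calculation. Every price and cost in it is a made-up, hypothetical number chosen for round arithmetic, not an actual Netflix figure; the point is only the shape of the argument.

```python
import math


def monthly_margin(subscription: float, dvds_shipped: int, cost_per_dvd: float,
                   streams: int, cost_per_stream: float) -> float:
    """Fixed monthly fee minus usage-driven costs (all inputs hypothetical)."""
    return subscription - dvds_shipped * cost_per_dvd - streams * cost_per_stream


def streams_before_loss(subscription: float, dvds_shipped: int,
                        cost_per_dvd: float, cost_per_stream: float) -> int:
    """How many streams a member can watch before that member becomes unprofitable."""
    headroom = subscription - dvds_shipped * cost_per_dvd
    return max(0, math.floor(headroom / cost_per_stream))


# Hypothetical member on a pricier plan: 15.00/month, 8 DVDs, 2 streams.
print(monthly_margin(15.0, 8, 1.0, 2, 0.5))   # 6.0
# The same member after downgrading to a cheaper plan and streaming all day.
print(monthly_margin(9.0, 4, 1.0, 20, 0.5))   # -5.0
```

Because the fee is fixed while each stream costs something, the downgraded member's margin goes negative once streaming passes `streams_before_loss(9.0, 4, 1.0, 0.5)`, which is 10 streams under these assumed numbers.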

I hope I'm wrong. I really like my Netflix service. I hope the love affair won't be fleeting.

Sunday, May 10, 2009

Floating to Space

Which makes John Powell's "Floating to Space: The Airship to Orbit Program" a fascinating read. Powell asks: can we float to space using lighter-than-air vehicles? Initially this seems like a stupid question, eliciting an "of course not!" answer. But hold your horses. Powell makes a convincing case that the idea is feasible. His proposal is quite simple. First, build a station that floats in the stratosphere, about 25 miles up, where the pressure is less than 1% of that at sea level. This station is manned, serviced and maintained using a large, V-shaped airship. To get to orbit, an even larger V-shaped airship, with "wings" about 1 mile long, is used. This airship uses both buoyancy and dynamic lift to rise slowly to orbit, using low-thrust engines to achieve the necessary velocity. The idea is compelling and makes you want to write a check to help fund the concept.

So far, Powell's aerospace company has built and flown test vehicles for both the floating station and the lower-atmosphere airship. These seem to show that this part of the program is feasible and could be scaled up. What has not been done is to test whether airship designs could fly from the station to orbit. That needs a lot of work to determine whether ships this large can be stable, and whether a propulsion system can accelerate the vehicle to orbital velocity, around Mach 25, in the near vacuum of the upper atmosphere.
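For a sense of scale, the speed needed for a circular orbit is v = sqrt(GM / r), where r is the distance from Earth's center. The sketch below assumes a 200 km orbit and, for the Mach conversion, a round 300 m/s speed of sound; both are illustrative choices of mine, not figures from Powell's book.

```python
import math

GM_EARTH = 3.986004418e14  # Earth's standard gravitational parameter, m^3/s^2
R_EARTH = 6.371e6          # Earth's mean radius, m


def circular_orbit_speed(altitude_m: float) -> float:
    """Speed of a circular orbit at the given altitude: v = sqrt(GM / r)."""
    return math.sqrt(GM_EARTH / (R_EARTH + altitude_m))


def mach_number(speed_m_s: float, speed_of_sound_m_s: float = 300.0) -> float:
    """Speed as a multiple of an assumed speed of sound (300 m/s by default)."""
    return speed_m_s / speed_of_sound_m_s


v = circular_orbit_speed(200e3)  # assumed 200 km orbit
print(round(v), round(mach_number(v), 1))
```

This comes out at roughly 7.8 km/s, or about Mach 26 against the assumed 300 m/s, in line with the "around Mach 25" figure.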

The sheer beauty of this idea is that even if Powell is wrong about getting all the way to space with the proposed approach, the floating station alone is a great idea: a platform that can be used for a host of commercial applications and tourism. While I think a true space station makes for a better space experience, I can't help thinking that a floating station with a black sky above and gravity would be a more popular destination than one with zero gravity, provided you can live without the zero-G sex experience, which I assume our first commercial tourists to the ISS have.

Powell stresses that the airship approach is inherently much safer than the current rocket approach, which seems intuitively true, although only actual operations would confirm it. Certainly the idea of traveling slowly but surely, with minimal discomfort, is very appealing, even if several hours without a restroom on the ascent to the station might be problematic, though not unfixable.

I thoroughly recommend this book and wish Powell all the luck in getting his vision realized.

Saturday, May 2, 2009

Thinking in Tweets

Despite the common assumption that our minds are contained within our brains, they in fact extend beyond them. A simple example: we use our fingers as memory aids when counting. Today, within a single generation, calculators have pretty much supplanted mental arithmetic.

The written word, primarily stored in books, has been the memory storage mechanism of choice for nearly 600 years, ever since Gutenberg invented the printing press. Even a modest library accurately stores more than a brain can easily hold. Most formal learning uses books, facilitating a transfer of knowledge from the frozen memories on the pages to our wetware. But books are more than just archival memory stores. They represent stored thoughts. Reading a novel replays those thoughts in your mind, so that you quickly become lost in the unfolding events, seeing, hearing and feeling the characters and their worlds. For people like me, books are a brain prosthesis that we cannot live without, and we surround ourselves with them on bookshelves in our favorite domains.

But books are relatively immobile, consulted only when they are at hand. Electronic search has improved both the scope of stored knowledge and its immediacy, especially when accessed from mobile devices such as smart phones. Wikipedia and Google search have become part of my thinking process today. I can recall facts I was already aware of more accurately and find new ones, though not quite in real time yet. The immediacy of search makes it feel more like a part of my thinking than a book does.

Which brings me back to Twitter and Tim's comment. Twitter, unlike most written text, is very simple: 140 characters conveying a simple thought, often attached to a URL that contains the "memory archive" connected to the thought. Tweets are small, often rapidly generated, and flow out into the noosphere to be picked up and passed on if worthy of interest. In some cases, rather than dying away, they are amplified and eventually returned to the sender as retweets. This is not unlike the signaling of neurons in your brain and the way thoughts vie for attention.

So consider for a moment that we are rapidly approaching a time when our thoughts will no longer be mostly confined to our brains, occasionally to be frozen in print, but will flow as a stream of consciousness broadcast out into the web, to mix with others' thoughts and return. When this stream moves as fast as our thoughts, it will integrate with our minds as reading does today. But unlike books, which are frozen thoughts, these thoughts will be dynamic, constantly changing and modifying the thoughts in our wetware. Readers already know the loss they feel when cut off from their books. Today, many of us feel a little disoriented when disconnected from the net. In the future, our minds may partially reside in the net.

The question is, how far can this go? Do our minds increasingly expand into the net, and if so, what will it be like to be human?

Wednesday, April 22, 2009

Cloud computing on cell phones

Now Technology Review reports on an experiment using smaller, more efficient and far less power-hungry computers for a server farm. FAWN (Fast Array of Wimpy Nodes) is a server bank built from the kind of CPUs and memory used in netbook computers. For applications that just need to deliver small amounts of data, this approach turns out to be faster and cheaper than conventional servers. The reason is that the I/O bottleneck is usually disk reads. By using lots of small processors with fast memory (DRAM and flash) in place of spinning disks, the server array can serve more requests. The two papers to be published can be obtained from the author's website, http://www.cs.cmu.edu/~dga/. Interesting reading.
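The idea of many small nodes each answering lookups from its own fast memory can be sketched as a toy sharded key-value store. This is a minimal illustration under my own assumptions (a hypothetical `WimpyCluster` class, simple hash-modulo placement, and Python dicts standing in for each node's memory); it is not the design described in the FAWN papers.

```python
import hashlib
from typing import Dict, List, Optional


class WimpyCluster:
    """Hypothetical cluster of small nodes, each keeping its share of keys in memory."""

    def __init__(self, num_nodes: int) -> None:
        # One in-memory dictionary per node stands in for that node's storage.
        self.nodes: List[Dict[str, bytes]] = [{} for _ in range(num_nodes)]

    def _node_for(self, key: str) -> Dict[str, bytes]:
        # A stable hash (unlike Python's per-process salted hash()) so a key
        # always lands on the same node.
        digest = hashlib.sha1(key.encode("utf-8")).digest()
        return self.nodes[int.from_bytes(digest[:8], "big") % len(self.nodes)]

    def put(self, key: str, value: bytes) -> None:
        self._node_for(key)[key] = value

    def get(self, key: str) -> Optional[bytes]:
        # Served straight from memory: no disk seek on the lookup path.
        return self._node_for(key).get(key)


cluster = WimpyCluster(num_nodes=8)
for i in range(1000):
    cluster.put(f"movie-{i}", f"metadata {i}".encode())
print(cluster.get("movie-42"))                # b'metadata 42'
print([len(node) for node in cluster.nodes])  # keys held by each node
```

Because each key lives on exactly one node, adding nodes spreads both the data and the request load. Plain modulo placement is the simplest choice, although it reshuffles most keys whenever the node count changes.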

Which leads me to a further idea. Why can't we use smart phones as a cloud? Here we have the fastest-growing market for CPUs and memory. Phones are ubiquitous and sit idle for much of the day. What if they could be linked to offer a huge cloud for small messages? The phones would need to keep the system updated with their current IP address and host some sort of lightweight web server. Security would need to be handled in a sandbox. If a web server could be installed as part of the OS, there could be a distributed cloud that encompassed the globe, running in memory on low-power CPUs, just like FAWN, but with billions of nodes. Small, cheap and out of control. Indeed.
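The first requirement, phones reporting their current IP address, amounts to a directory with heartbeats. Here is a minimal sketch under stated assumptions: the `PhoneRegistry` class, its 60-second expiry and the caller-supplied timestamps are all hypothetical, and a real system would also need authentication and the sandboxing mentioned above.

```python
import time
from typing import Dict, List, Optional, Tuple


class PhoneRegistry:
    """Hypothetical directory mapping phone IDs to their last reported IP address."""

    def __init__(self, ttl_seconds: float = 60.0) -> None:
        self.ttl_seconds = ttl_seconds
        # phone_id -> (ip_address, time of last heartbeat)
        self._entries: Dict[str, Tuple[str, float]] = {}

    def heartbeat(self, phone_id: str, ip_address: str,
                  now: Optional[float] = None) -> None:
        """Record (or refresh) a phone's current IP address."""
        timestamp = time.time() if now is None else now
        self._entries[phone_id] = (ip_address, timestamp)

    def live_nodes(self, now: Optional[float] = None) -> List[Tuple[str, str]]:
        """Phones heard from within the last ttl_seconds, as (phone_id, ip) pairs."""
        current = time.time() if now is None else now
        return [(phone_id, ip) for phone_id, (ip, seen) in self._entries.items()
                if current - seen <= self.ttl_seconds]


registry = PhoneRegistry(ttl_seconds=60)
registry.heartbeat("phone-a", "192.0.2.10", now=0)
registry.heartbeat("phone-b", "192.0.2.20", now=50)
print(registry.live_nodes(now=100))  # [('phone-b', '192.0.2.20')]
```

Expiring silent phones matters here because, unlike servers, phones change networks and go offline constantly; only recently heard-from nodes should be handed requests.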

Food for thought.

Thursday, April 16, 2009

Cloud Dynamics

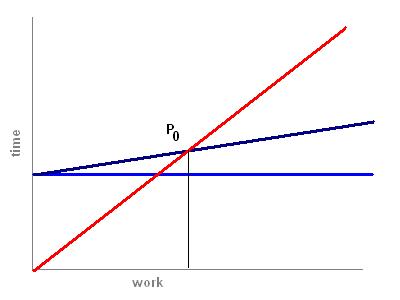

This is how I describe the dynamics of cloud computing. The first chart shows the model. The axes are computational workload vs. time to complete a task. The red line is for the client device, which is assumed to be less powerful than the server. The blue line is the latency of the connection to the server: the time it takes to deliver a data request and return a result. For a simple ping, this is of the order of 1/10 of a second or less, depending on traffic. Adding more data increases the time because bandwidth is limited, which is why Google Maps can take forever to load on a smart phone over the cell network. The dark blue line is the total time for a server request: the latency plus the time the more powerful server takes to do the processing.

Point P0 is the break-even point. Tasks that take less work than this should be handled by the client device, while tasks requiring more work may be handled more effectively by the server, assuming the service wants to offer the shortest response time.
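The model in the first chart reduces to two straight lines. Assuming the client processes work at speed c, the server at speed s, and each server request adds a fixed latency L, the client takes w/c and the server takes L + w/s, so the lines cross at P0 = L / (1/c - 1/s). A minimal sketch of that arithmetic, with the speeds and latency as illustrative parameters rather than measured values:

```python
from typing import Optional


def client_time(work: float, client_speed: float) -> float:
    """Red line: time for the client to process `work` on its own."""
    return work / client_speed


def server_time(work: float, server_speed: float, latency: float) -> float:
    """Dark blue line: connection latency plus the server's processing time."""
    return latency + work / server_speed


def break_even_work(client_speed: float, server_speed: float,
                    latency: float) -> Optional[float]:
    """P0: the workload where client_time == server_time.

    Solving w / c = L + w / s for w gives w = L / (1/c - 1/s).
    Returns None if the server is no faster than the client, since the
    lines then never cross for positive workloads.
    """
    if server_speed <= client_speed:
        return None
    return latency / (1.0 / client_speed - 1.0 / server_speed)


def where_to_run(work: float, client_speed: float, server_speed: float,
                 latency: float) -> str:
    """Run the task wherever it finishes sooner (ties go to the client)."""
    if client_time(work, client_speed) <= server_time(work, server_speed, latency):
        return "client"
    return "server"


# Illustrative numbers: server 10x faster than the client, 0.1 s latency.
print(break_even_work(100, 1000, 0.1))
print(where_to_run(5, 100, 1000, 0.1))   # client
print(where_to_run(50, 100, 1000, 0.1))  # server
```

Passing a slower client (say `client_speed=50`) moves P0 to the left, matching the smart phone case, while a server that is no faster than the client returns `None`, the no-break-even case. As `server_speed` grows without bound, P0 approaches `latency * client_speed`.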

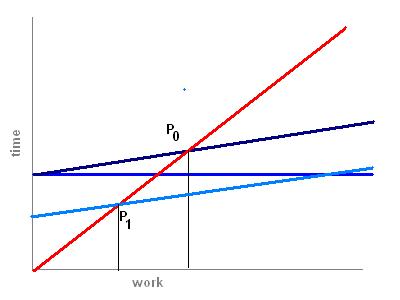

The next chart shows the effect of different clients. A PC is faster than a smart phone, so with a lower-powered device the break-even point shifts to the left. This means that more processing should be handled by the servers.

The third chart shows the effect of lower latency. If you could make the connection faster, again the server should take more load. In practice, the latency is mostly due to bandwidth limitations as data is moved between local device and server. Increasing bandwidth therefore makes servers more attractive.
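Since the latency here is mostly bandwidth, one simple assumption is latency = round-trip time + payload size / bandwidth. The sketch below (the payload size, link speeds and work rates are all hypothetical) plugs that into the break-even formula P0 = L / (1/c - 1/s) to show P0 falling as the link gets faster.

```python
from typing import Optional


def request_latency(rtt_s: float, payload_bytes: int, bandwidth_bps: float) -> float:
    """Round trip plus time to push the payload through a link of bandwidth_bps bits/s."""
    return rtt_s + payload_bytes * 8 / bandwidth_bps


def break_even_work(client_speed: float, server_speed: float,
                    latency: float) -> Optional[float]:
    """Workload at which client time equals latency plus server time."""
    if server_speed <= client_speed:
        return None
    return latency / (1.0 / client_speed - 1.0 / server_speed)


# Hypothetical 100 kB request over a 1 Mbit/s link versus a 10 Mbit/s link.
slow_link = request_latency(0.1, 100_000, 1_000_000)   # about 0.9 s
fast_link = request_latency(0.1, 100_000, 10_000_000)  # about 0.18 s
for name, latency in (("1 Mbit/s", slow_link), ("10 Mbit/s", fast_link)):
    print(name, break_even_work(client_speed=100, server_speed=1000, latency=latency))
```

The faster link cuts the break-even workload by the same factor as it cuts latency, so more tasks become worth sending to the server.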

The fourth chart shows two extremes. The vertical orange line is for a dumb terminal that cannot do any processing. This isn’t an obsolete idea. Ultra-thin clients might have no processing at all, e.g. to display signs, or may deliberately prevent any local processing. You can approximate this in your browser by turning off Java and JavaScript.

The horizontal green line assumes that the server is infinitely scalable, rather like Google’s search engine. In this case, almost any task is computable in a short time.

So what does this tell us about the future? First, we know that the fastest-growing market is the mobile market, whether smart phones or the new netbooks. This suggests that demand for server processing in the cloud is going to increase dramatically. Desktop PCs and workstations, however, are unlikely to benefit from the cloud doing the processing, so we can expect big applications to remain installed on the local machine, using the cloud simply to deliver software updates. The war over bandwidth pricing implies that the providers will effectively keep bandwidth low and rates high, shifting the break-even point toward more local processing and hence driving up demand for more powerful local devices. If unchecked, this will tend to stifle the growth of ultra-thin client devices.

For me, the interesting story is what happens if we can build extremely powerful servers, able to bring a lot of coordinated processing power to bear on a task. In this case it may make a lot of sense to offload processing to the server, as we do with search. One way to think about this is with the familiar calculator widget. Simple four-function or scientific calculations can easily be done on the client, so the calculator is an installed application.

But what if you want to compute hard stuff, say, whether a large number is prime? Then it makes sense to use the server, as the task can easily be split across many machines in parallel and the result returned very quickly indeed. Now the calculator will have more functionality, doing lightweight calculations locally and heavyweight ones on the server. This approach applies to many tasks being contemplated today and is driving demand for both platforms and programming languages that make this very easy to achieve.
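The prime-checking example splits naturally because trial division can test disjoint ranges of candidate divisors independently. The sketch below divides the candidates into chunks and farms them out. A `ThreadPoolExecutor` stands in for the server's many machines, so it only illustrates the partitioning (CPython threads will not actually speed up this CPU-bound loop), and trial division is chosen for clarity rather than speed.

```python
import math
from concurrent.futures import ThreadPoolExecutor
from typing import List, Tuple


def has_divisor_in(n: int, lo: int, hi: int) -> bool:
    """Trial division: does any d with lo <= d < hi divide n?"""
    return any(n % d == 0 for d in range(lo, hi))


def split_range(lo: int, hi: int, parts: int) -> List[Tuple[int, int]]:
    """Cut [lo, hi) into at most `parts` contiguous chunks."""
    span = max(1, -(-(hi - lo) // parts))  # ceiling division
    return [(start, min(start + span, hi)) for start in range(lo, hi, span)]


def is_prime_parallel(n: int, workers: int = 4) -> bool:
    if n < 2:
        return False
    if n < 4:
        return True  # 2 and 3
    if n % 2 == 0:
        return False
    # Any factor pair of n has one member <= isqrt(n), so 3..isqrt(n) suffices.
    chunks = split_range(3, math.isqrt(n) + 1, workers)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        found = pool.map(lambda c: has_divisor_in(n, c[0], c[1]), chunks)
        return not any(found)


print(is_prime_parallel(7919))  # True
print(is_prime_parallel(7917))  # False (7917 = 3 * 7 * 13 * 29)
```

On a real server farm each chunk would go to a different machine; for genuinely large numbers a probabilistic test such as Miller-Rabin would be far quicker than trial division.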

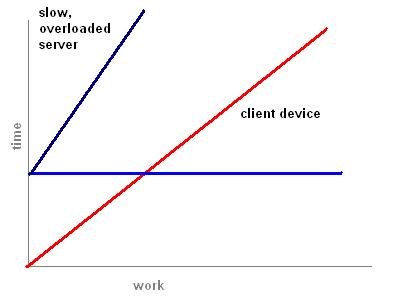

Finally, let's look at a case where the server is slower than the client. There is no break-even point because the client device is always faster than the server.

This scenario describes the early days of the networked personal computer. Those were the days when networks were slow and servers, if they existed, were mostly simple file servers. The period was also characterized by extremely low-bandwidth modems, which prevented any reasonably fast computational turnaround. In that world, it made sense to distribute software in boxes and install it on the client. This is still the dominant paradigm even today, but it is clear that the advent of server farms and broadband, plus the demand for more lightweight mobile devices, will drive the cloud computing paradigm.

Stay tuned...

Wednesday, April 15, 2009

Courtesy of Marketing, Everything is Cloud Computing

And so it went at a talk I attended last night, entitled "The Business Value of Cloud Computing." We had two engaging industry people talking about the cloud from opposite, but possibly complementary, corners.

In the right corner was Paul Steinberg of Soonr.com. He spoke generically about SaaS (Software as a Service) and rolled out the usual suspects, Gmail and Zoho Office, as examples of this trend. Now I ask you, if Gmail were delivered via a mainframe, would it suddenly lose its cloud status? Conversely, if I put up a page on my ISP-hosted website with a rich client application embedded in it, would that be SaaS or cloud computing? At one point Paul said "SaaS is the same as cloud computing." Once the sales and marketing people get loose, you know the hype machine is in full swing.

In the left corner was Zorawar Biri Singh, from IBM. Biri presented some corporate IBM slides at a dizzying pace, never allowing anything to be viewed in detail or explaining much. His role appeared to be to tell everyone that IBM was in the cloud game and that its experience and dominance (in high-end services?) would deliver the goods in this arena. He did offer a couple of interesting details. First, virtual machines were rapidly outstripping physical machines. (Go out and buy VMware, NYSE: VMW?) Second, while Amazon is selling CPU time for 10 cents an hour, he thought the real cost might be closer to half a cent an hour. Do I hear 'supernormal profits' and IBM's rush to participate? One member of the audience asked what sustainable advantage IBM might have in this game. Answer: "waffle, waffle, blah, blah, waffle". We've seen IBM do this before; last time it was SOA (service-oriented architecture). IBM's solution was typically expensive and required a lot of IT and programmer time. Bottom line for Biri: cloud computing cuts costs. And IBM will be just the company to help you do that, no really.

So it looks like IBM is looking to build a rich server platform in the sky.

The problem with the cloud computing hype is that the customer doesn't care how the server parts work, in the same way that I don't really know which fuels generate the power delivered to my house. All I care about is that it is there, and I complain about it when the summer blackouts arrive. Most customers care about what it means for them. Is their client software going to be installed or web-delivered? What features does it have? What does it cost? Where should the data reside, and if in the cloud, is it secure? Increasingly the question will be "Can I get access to my software and data from any device, anywhere?" And increasingly that will mean mobile devices, from laptops to smart phone-sized ones.

What few vendors talk about is that the real power of the cloud lies in software and data increasingly being able to talk to each other outside their silos. Software will be able to draw on other software and data, harnessing a lot of resources to deliver really powerful information processing and delivery services.

Then the rain will fall on all of us.